In the high-stakes race towards autonomous driving, a car that perceives a threat before its driver can is a significant step forward. A recent real-world demonstration, captured on video by an owner, shows Tesla's Full Self-Driving (FSD) system proactively moving the vehicle to yield for an approaching emergency vehicle a full three seconds before the driver could hear the siren. This incident isn't just a neat party trick; it's a tangible example of how Tesla's vision-based artificial intelligence is evolving into a predictive safety net, potentially rewriting the playbook for how vehicles interact with emergency services.

AI Vision Outpaces Human Hearing

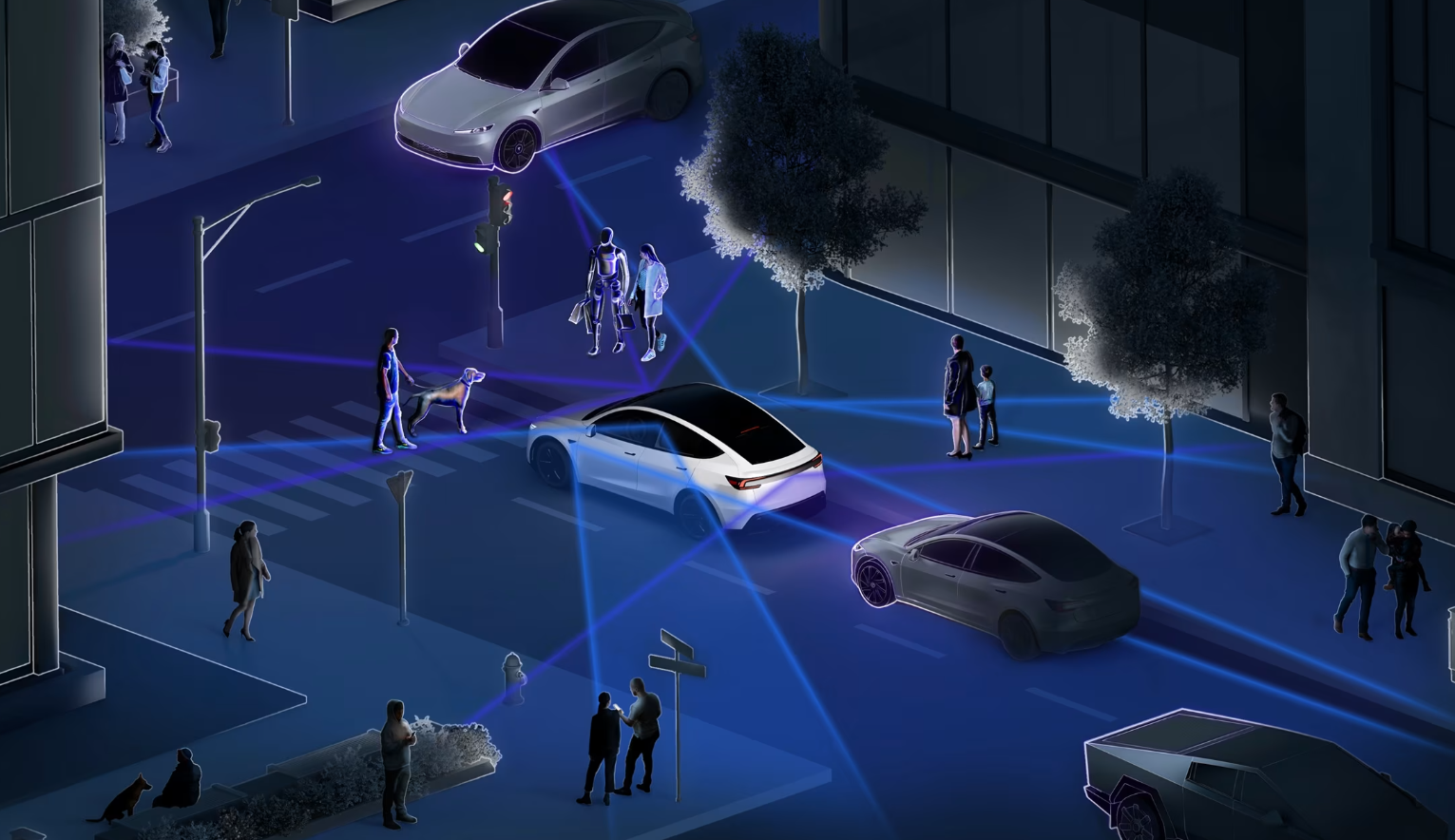

The core of this event lies in the capabilities of Tesla's AI Vision system. In this instance the vehicle appears to have relied on camera inputs and neural network processing: it identified the visual patterns of the approaching ambulance, likely its flashing lights, and initiated the yielding maneuver. The critical detail is the timing: the system reacted to visual cues before the siren was audible in the cabin. This demonstrates a level of environmental awareness that extends beyond human limitations, where obstructions, wind noise, or audio systems can delay a driver's reaction. It underscores FSD's design philosophy: to serve as a vigilant co-pilot that watches every camera feed at once and makes defensive decisions.

Beyond Vision: The Role of Acoustic Processing

While the visual system took the lead in this instance, Tesla has quietly integrated another layer of sensing. Since 2021, Tesla vehicles have carried external audio hardware associated with the "Boombox" feature, and Tesla has said that audio input is also used to help detect emergency sirens. In scenarios where an ambulance is obscured from view, whether around a blind corner or blocked by traffic, acoustic detection provides a crucial secondary confirmation. This multi-modal approach, combining computer vision with targeted audio processing, gives the system a more robust and redundant way to recognize emergency scenarios, a non-negotiable requirement for any autonomous system.
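As a rough illustration of why two sensing channels are more robust than one, the sketch below combines a visual confidence and an audio confidence with a simple "noisy-OR" rule. This is not Tesla's implementation: the function names, the fusion rule, and the 0.8 threshold are all hypothetical, chosen only to show how either channel alone can trigger a yield when the other is blocked.

```python
def fuse_siren_evidence(p_visual: float, p_audio: float) -> float:
    """Combine two independent detection confidences with a noisy-OR rule.

    Hypothetical helper for illustration only; inputs are probabilities in [0, 1].
    """
    for p in (p_visual, p_audio):
        if not 0.0 <= p <= 1.0:
            raise ValueError("confidences must be between 0 and 1")
    # Probability that at least one channel has truly detected an emergency vehicle,
    # assuming the two channels fail independently.
    return 1.0 - (1.0 - p_visual) * (1.0 - p_audio)


def should_yield(p_visual: float, p_audio: float, threshold: float = 0.8) -> bool:
    """Decide whether to yield; the threshold is an assumed, illustrative value."""
    return fuse_siren_evidence(p_visual, p_audio) >= threshold


# Ambulance hidden behind a blind corner: cameras see nothing, audio is confident.
print(should_yield(p_visual=0.0, p_audio=0.9))
# Two moderately confident channels reinforce each other.
print(should_yield(p_visual=0.6, p_audio=0.6))
```

Under this rule, a strong signal from either channel is enough on its own, and two weaker signals can reinforce each other, which is the practical benefit the multi-modal approach is meant to deliver.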

Implications for Safety and Regulatory Trust

For Tesla owners and investors, this evolution carries profound implications. Each incremental demonstration of predictive safety builds public and regulatory trust in the FSD platform. Consistent, reliable reactions to emergency vehicles are a fundamental benchmark for approving higher levels of automation. For owners, it translates to a tangible increase in real-world safety, offering protection not just from their own errors but from the hazards created by other vehicles rushing through traffic. As these systems improve and such events become commonplace, they strengthen the value proposition of Tesla's software, moving it from a driver-assist novelty toward an essential, life-saving feature. The road to full autonomy is long, but moments where the car notices danger before you do represent meaningful milestones on that journey.